SVM (Support Vector Machine)


SVM looks for the decision boundary (hyperplane) with the widest possible margin, keeping the data points of both classes as far from the boundary as possible.

I. Core Formulas and Mathematical Concepts of SVM (Basics)

1. Objective Function of Linear SVM (Optimization Problem)

We aim to find a hyperplane defined by the equation:

$$w^{T}x + b = 0$$

Where:

- $w$: the normal vector (determines the orientation of the hyperplane)

- $b$: the bias term (determines the position of the hyperplane)

- $x$: the input data point

Classification Decision Rule:

- If $w^{T}x + b > 0$, the sample is predicted as class +1; if $w^{T}x + b < 0$, it is predicted as class -1. Equivalently, $\hat{y} = \operatorname{sign}(w^{T}x + b)$.
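The following small NumPy sketch makes the decision rule concrete. The values of `w` and `b` are hypothetical and picked by hand rather than learned. The helper `predict` is illustrative and is not a library function. A point with a score of exactly 0 lies on the hyperplane itself; this sketch assigns such points to class -1 by convention.

```python
import numpy as np

# Hypothetical hyperplane parameters, chosen by hand for illustration
w = np.array([2.0, -1.0])  # normal vector: orientation of the hyperplane
b = -1.0                   # bias: shifts the hyperplane's position

def predict(X, w, b):
    """Return +1 where w^T x + b > 0, else -1 (a score of exactly 0 maps to -1 here)."""
    scores = X @ w + b
    return np.where(scores > 0, 1, -1)

X = np.array([[2.0, 1.0],   # 4 - 1 - 1 = 2     -> +1
              [0.0, 1.0],   # 0 - 1 - 1 = -2    -> -1
              [1.0, 0.5]])  # 2 - 0.5 - 1 = 0.5 -> +1
print(predict(X, w, b))  # [ 1 -1  1]
```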

2. Objective Function for Maximizing the Margin

The core idea of SVM is to maximize the classification margin.

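Scale $w$ and $b$ so that the points closest to the hyperplane satisfy $|w^{T}x_i + b| = 1$. The margin is then the distance between the two parallel boundaries $w^{T}x + b = \pm 1$:

$$\text{margin} = \frac{2}{\|w\|}$$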

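Therefore, given training samples $x_i$ with labels $y_i \in \{+1, -1\}$, our problem becomes an optimization problem:

$$\min_{w,\,b}\ \frac{1}{2}\|w\|^{2} \quad \text{s.t.} \quad y_i\,(w^{T}x_i + b) \ge 1,\quad i = 1, \dots, n$$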

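- This is a typical constrained convex optimization problem (specifically, a quadratic program). Minimizing $\frac{1}{2}\|w\|^{2}$ is equivalent to maximizing the margin $\frac{2}{\|w\|}$. The square and the factor $\frac{1}{2}$ only make the objective smooth and its derivative convenient.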
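You can check the margin formula by fitting a linear SVM on a tiny, separable toy set and computing $2/\|w\|$ from the learned coefficients. This sketch uses scikit-learn's `SVC` with a very large `C` as a stand-in for the hard-margin problem; that substitution is an assumption. The data points are made up so that the answer is easy to verify by hand. The classes are 2 units apart along the first axis, so the optimum is $w = (1, 0)$, $b = -1$, and the margin is 2.

```python
import numpy as np
from sklearn.svm import SVC

# Tiny, linearly separable toy set (made up for illustration)
X = np.array([[0.0, 0.0], [0.0, 1.0], [-1.0, 0.5],   # class -1
              [2.0, 0.0], [2.0, 1.0], [3.0, 0.5]])   # class +1
y = np.array([-1, -1, -1, 1, 1, 1])

# A very large C approximates the hard-margin problem
clf = SVC(kernel="linear", C=1e6).fit(X, y)

w = clf.coef_[0]        # learned normal vector (expected close to [1, 0])
b = clf.intercept_[0]   # learned bias (expected close to -1)
margin = 2.0 / np.linalg.norm(w)
print(f"w = {w}, b = {b:.3f}, margin = {margin:.3f}")

# Support vectors lie on the boundaries, so y_i (w^T x_i + b) is close to 1 for each
print(y[clf.support_] * (clf.support_vectors_ @ w + b))
```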

3. Introducing Slack Variables (Soft Margin) — Allowing Misclassifications

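In real-world datasets, perfect linear separability is often not possible. To handle this, we introduce slack variables $\xi_i \ge 0$, which let individual samples violate the margin, and relax the constraints:

$$\min_{w,\,b,\,\xi}\ \frac{1}{2}\|w\|^{2} + C\sum_{i=1}^{n}\xi_i \quad \text{s.t.} \quad y_i\,(w^{T}x_i + b) \ge 1 - \xi_i,\quad \xi_i \ge 0$$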

$C$ is the regularization parameter that controls the trade-off between maximizing the margin and minimizing the classification error. A larger $C$ means less tolerance for misclassification, which leads to a harder margin. A smaller $C$ allows more misclassifications and results in a softer margin.

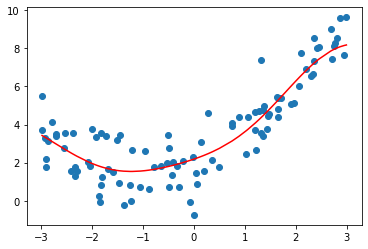

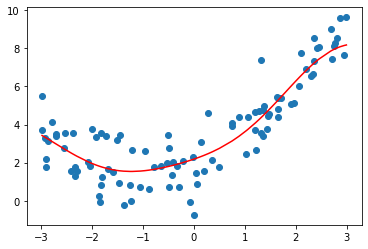
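You can see the effect of $C$ by computing the slack values $\xi_i = \max(0,\ 1 - y_i(w^{T}x_i + b))$ for a fitted model; at the optimum, each $\xi_i$ takes exactly this value. The sketch below compares a small and a large `C` on illustrative data: synthetic Gaussian clusters plus one deliberately mislabeled outlier. Both the data and the two `C` values are assumptions. The smaller `C` should give a wider margin and more total slack.

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(0)
# Synthetic data: two Gaussian clusters plus one outlier labeled -1 inside the +1 cluster
X = np.vstack([
    rng.normal(loc=(-2.0, 0.0), scale=1.0, size=(40, 2)),  # class -1
    rng.normal(loc=(2.0, 0.0), scale=1.0, size=(40, 2)),   # class +1
    [[2.5, 0.0]],                                          # outlier, labeled -1
])
y = np.array([-1] * 40 + [1] * 40 + [-1])

results = {}
for C in (0.01, 100.0):
    clf = SVC(kernel="linear", C=C).fit(X, y)
    w, b = clf.coef_[0], clf.intercept_[0]
    # Slack xi_i = max(0, 1 - y_i (w^T x_i + b)): how far each point falls inside or beyond the margin
    xi = np.maximum(0.0, 1.0 - y * (X @ w + b))
    results[C] = {"margin": 2.0 / np.linalg.norm(w), "slack": xi.sum(), "n_sv": len(clf.support_)}
    print(f"C={C}: margin={results[C]['margin']:.3f}, "
          f"total slack={results[C]['slack']:.2f}, support vectors={results[C]['n_sv']}")
```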

4. Kernel Trick — Handling Nonlinear Problems

When the data is not linearly separable, SVM uses kernel functions to implicitly map the original features into a higher-dimensional space, where the data is more likely to become linearly separable.

The choice of kernel function plays a crucial role in the performance of an SVM model. The right choice depends largely on the characteristics of the data and the type of decision boundary you expect.

| Data Characteristics | Recommended Kernel |
|---|---|
| Linearly separable, or very high-dimensional and sparse (e.g., text) | Linear Kernel |
| Complex nonlinear structures (e.g., images) | RBF Kernel (scikit-learn's default and the most commonly used) |
| Clear polynomial relationships between features | Polynomial Kernel |
| Want to mimic neural network (tanh) behavior | Sigmoid Kernel (less commonly used) |
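The recommendations in the table are rules of thumb. One way to check them on your own data is to cross-validate each kernel. This sketch uses scikit-learn's synthetic `make_moons` dataset, an assumption chosen because its class boundary is curved, and leaves every other hyperparameter at its default.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

# Two interleaving half-moons: a synthetic dataset with a curved class boundary
X, y = make_moons(n_samples=300, noise=0.15, random_state=0)

scores = {}
for kernel in ("linear", "poly", "rbf", "sigmoid"):
    clf = SVC(kernel=kernel)  # other hyperparameters left at scikit-learn defaults
    scores[kernel] = cross_val_score(clf, X, y, cv=5).mean()
    print(f"{kernel:>8}: mean 5-fold accuracy = {scores[kernel]:.3f}")
```

On a curved boundary like this one, the RBF kernel would be expected to beat the linear kernel. On other data the ranking can differ, which is why a quick comparison like this one is worth running.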

With kernel functions, we never need to compute the high-dimensional mapping $\phi(x)$ explicitly. Instead, the kernel function directly gives the inner product in the transformed space: $K(x_i, x_j) = \phi(x_i)^{T}\phi(x_j)$.
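The kernel trick can be verified numerically for the degree-2 polynomial kernel $K(x, z) = (x^{T}z)^2$ on 2-D inputs. Its explicit feature map is $\phi(x) = (x_1^2,\ \sqrt{2}\,x_1x_2,\ x_2^2)$. The sketch below computes the same number three ways:

- through the explicit map (the helper `phi` is illustrative);
- directly in the original space;
- with scikit-learn's `polynomial_kernel` using `gamma=1` and `coef0=0`.

```python
import numpy as np
from sklearn.metrics.pairwise import polynomial_kernel

def phi(x):
    """Explicit degree-2 feature map for a 2-D input: (x1^2, sqrt(2) x1 x2, x2^2)."""
    x1, x2 = x
    return np.array([x1**2, np.sqrt(2) * x1 * x2, x2**2])

x = np.array([1.0, 2.0])
z = np.array([3.0, -1.0])

explicit = phi(x) @ phi(z)   # inner product after mapping to 3-D
kernel = (x @ z) ** 2        # same quantity computed in the original 2-D space
library = polynomial_kernel(x.reshape(1, -1), z.reshape(1, -1),
                            degree=2, gamma=1, coef0=0)[0, 0]

# All three equal (1*3 + 2*(-1))^2 = 1, up to floating-point rounding
print(explicit, kernel, library)
```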

(For more details on Kernel trick and inner products, see: Question 3: What is the mathematical definition of a kernel function?)

II. Implementing SVM in Python (Using scikit-learn)

I will show you how to implement an SVM classifier using scikit-learn.

1. Import the Required Libraries

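A plausible set of imports for this walkthrough, assuming scikit-learn is installed and that the example uses a built-in dataset, a scaling step, the `SVC` classifier, and standard metrics. This is a sketch under those assumptions.

```python
# Imports for a scikit-learn SVM classification workflow (assumes scikit-learn is installed)
from sklearn import datasets                          # built-in toy datasets
from sklearn.model_selection import train_test_split  # train/test splitting
from sklearn.pipeline import make_pipeline            # chain preprocessing and model
from sklearn.preprocessing import StandardScaler      # feature standardization
from sklearn.svm import SVC                           # support vector classifier
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
```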

2. Load the Data

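Here is a sketch of loading the data and making the train/test split. It assumes the built-in Iris dataset (150 samples, 4 features, 3 classes) stands in for your own data.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split

# Assumption: the classic Iris dataset as example data
X, y = datasets.load_iris(return_X_y=True)

# Hold out 20% for testing; stratify keeps class proportions equal in both splits
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)
print(X_train.shape, X_test.shape)  # (120, 4) (30, 4)
```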

3. Build and Train the Model

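A minimal sketch of building and training the model, again assuming the Iris data; the loading code is repeated so the block runs on its own. Wrapping `SVC` in a pipeline with `StandardScaler` is a design choice added here: SVMs are sensitive to feature scales, and the pipeline scales the test data with statistics learned from the training data only.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = datasets.load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Standardize features, then fit an RBF-kernel SVM
model = make_pipeline(
    StandardScaler(),
    SVC(kernel="rbf", C=1.0, gamma="scale"),  # scikit-learn's defaults, written out explicitly
)
model.fit(X_train, y_train)

svc = model.named_steps["svc"]  # make_pipeline names each step after its lowercased class
print("support vectors per class:", svc.n_support_)
```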

Parameter Explanations:

- kernel: the type of kernel function (e.g., 'linear', 'poly', 'rbf', 'sigmoid'; default: 'rbf')

- epsilon: the tolerance margin within which errors are not penalized. Note that this parameter belongs to SVR (support vector regression), not to the SVC classifier.

- gamma: kernel coefficient for the 'rbf', 'poly', and 'sigmoid' kernels; a larger value makes the model more complex (default: 'scale')

- C: regularization parameter that controls the trade-off between model complexity and error minimization (default: 1.0)
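Because `C` and `gamma` interact, they are usually tuned together. This sketch uses scikit-learn's `GridSearchCV` and assumes the Iris data and a scaled RBF pipeline. The grid values are arbitrary illustrative choices, not recommendations.

```python
from sklearn import datasets
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = datasets.load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

pipe = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
# Pipeline parameters are addressed as <step name>__<parameter name>
param_grid = {
    "svc__C": [0.1, 1, 10, 100],
    "svc__gamma": ["scale", 0.01, 0.1, 1],
}
search = GridSearchCV(pipe, param_grid, cv=5)  # 5-fold CV on the training set only
search.fit(X_train, y_train)

print("best parameters:", search.best_params_)
print(f"best CV accuracy: {search.best_score_:.3f}")
print(f"test accuracy: {search.score(X_test, y_test):.3f}")  # uses the refit best model
```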

4. Model Evaluation

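Here is a sketch of evaluating the trained model on the held-out test set. It again assumes the Iris data and the scaled RBF pipeline, rebuilt here so the block is self-contained. `accuracy_score`, `confusion_matrix`, and `classification_report` are standard scikit-learn metrics.

```python
from sklearn import datasets
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=42, stratify=iris.target
)
model = make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0, gamma="scale"))
model.fit(X_train, y_train)

y_pred = model.predict(X_test)
acc = accuracy_score(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred)  # rows: true class, columns: predicted class

print(f"accuracy: {acc:.3f}")
print(cm)
print(classification_report(y_test, y_pred, target_names=iris.target_names))
```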

III. Suitable Use Cases

SVM is particularly well-suited for the following scenarios:

- Small datasets with high feature dimensionality (e.g., image recognition, text classification)

- Clear but non-linear decision boundaries between classes

- Situations where good classification performance is needed even with limited training samples

For example, suppose you want to distinguish between images of cats and dogs. SVM can be effective here, especially when each image is described by many features (e.g., pixels) but the number of labeled samples is relatively small.